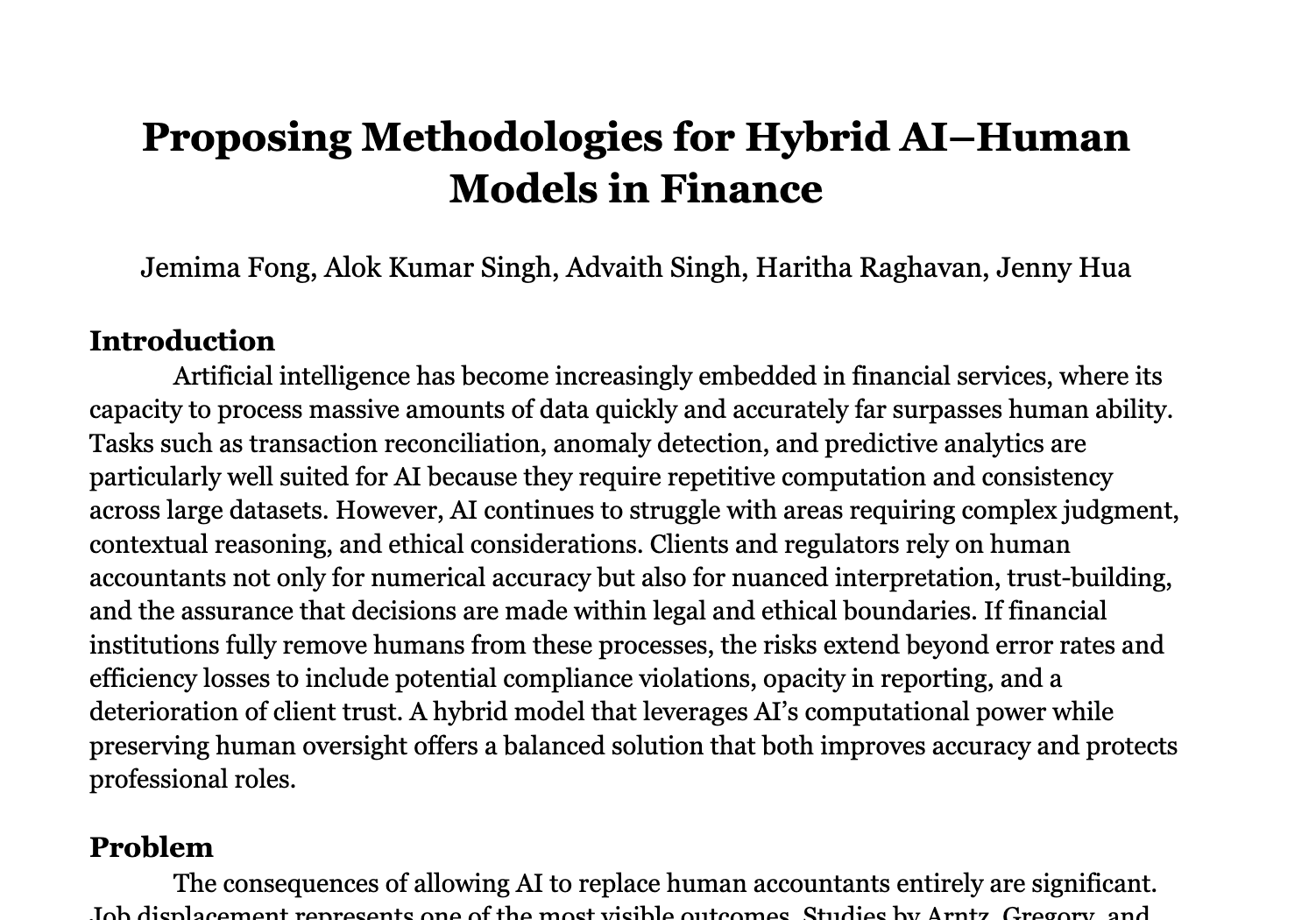

AI Prevention and Prediction of Type 2 Diabetes Research Paper

A comprehensive research paper examining how artificial intelligence can be applied to predict and help prevent type 2 diabetes by analyzing diverse data sources, including genetic data, electronic health records (EHRs), and data from wearable devices.

Description

This paper surveys AI models and algorithms for diabetes risk prediction, ranging from logistic regression to deep neural networks. It highlights real-world deployments by organizations such as Google Health, IBM Watson, and Medtronic, and compares the accuracy of AI models with that of traditional screening tools. It also addresses ethical concerns such as data bias, algorithmic discrimination, and patient privacy, and proposes multimodal approaches to improve predictive validity. The paper concludes that AI offers transformative potential for the early detection and prevention of type 2 diabetes, though challenges remain in generalizability, fairness, and transparency.